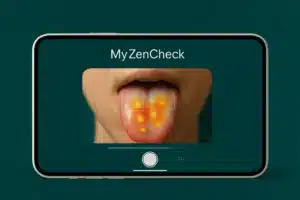

What is the AI Tongue Diagnosis Engine, and why do these updates matter?

At MyZenCheck, our AI Tongue Diagnosis Engine helps users capture a clear tongue photo, analyze key features (shape, coating, color, and anomalies), and translate those findings into gentle, location-aware self‑care guidance. We use Azure AI Foundry for conversational flows and report generation, and Azure Custom Vision for anomaly detection. These monthly updates improve accuracy, reliability, and accessibility, so your results are clearer and your next steps are simpler.

“We are currently developing a direct tongue detector right in the camera. It will simplify the experience and improve the quality of photographed tongues,” says Gabriela Sikorova, co-founder of MyZenCheck and lead guarantor of the AI tongue diagnostics project.

“Our customers get a basic snapshot of their well‑being and practical recommendations for better balance based on their location. Our AI Greg suggests herbs commonly available nearby, free of charge,” says Rostislav Sikora, AI medical diagnostics expert and co-founder of MyZenCheck.

Important: MyZenCheck offers educational wellness insights. It is not a substitute for professional medical advice, diagnosis, or treatment.

Improved accuracy in shape, coating & color detection

- Four specialized AI models collaborate across shape, coating, color, and anomaly checks.

- Current aggregate accuracy: 75% (internal validation).

- Why it’s better: clearer segmentation of coating vs. body, more stable color normalization under mixed lighting, more robust edge detection for scalloping (tooth marks), and added outlier screening via Custom Vision.

- What you’ll notice: fewer retakes, more consistent descriptors (e.g., “thin white coating,” “slightly red body,” “tooth‑mark edges”), and tighter confidence ranges.
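The update does not say how the 75% figure combines the per-task results. As a hedged illustration only, the sketch below shows two common ways to aggregate accuracy across tasks: micro-averaging (pool every prediction) and macro-averaging (weight each task equally). `TaskResult` and the counts in the usage lines are hypothetical toy values, not MyZenCheck validation data.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Hypothetical evaluation tally for one task (e.g. shape, coating, color)."""
    task: str
    correct: int
    total: int


def micro_accuracy(results: list[TaskResult]) -> float:
    """Pool all predictions across tasks: total correct / total evaluated."""
    evaluated = sum(r.total for r in results)
    if evaluated == 0:
        raise ValueError("no evaluated samples")
    return sum(r.correct for r in results) / evaluated


def macro_accuracy(results: list[TaskResult]) -> float:
    """Average per-task accuracies so each task counts equally."""
    scored = [r for r in results if r.total > 0]
    if not scored:
        raise ValueError("no evaluated samples")
    return sum(r.correct / r.total for r in scored) / len(scored)


# Toy numbers for illustration only.
toy = [
    TaskResult("shape", correct=9, total=10),
    TaskResult("coating", correct=6, total=10),
    TaskResult("color", correct=12, total=20),
]
print(f"micro: {micro_accuracy(toy):.3f}")  # 27 / 40 = 0.675
print(f"macro: {macro_accuracy(toy):.3f}")  # (0.9 + 0.6 + 0.6) / 3 = 0.700
```

When tasks have very different sample sizes, the two averages can diverge, which is one reason a single "aggregate" figure is worth defining explicitly.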

Tech stack highlights

- Conversational workflow & report assembly in Azure AI Foundry.

- Visual anomaly detection via Azure Custom Vision (trained on tongue capture artifacts like blur, glare, and framing errors).

- Privacy‑first processing with minimal data retention and anonymization where possible.
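To make the split of responsibilities concrete, here is a minimal sketch of how an artifact screen could gate the per-feature reads before a report is assembled. It assumes the anomaly model returns a probability per artifact type (blur, glare, framing) and that the shape, coating, and color models each return a descriptor with a confidence score. The thresholds, class names, and `assemble_report` function are hypothetical; no Azure SDK calls are shown or implied.

```python
from dataclasses import dataclass, field

# Hypothetical cut-offs; real values would come from validation.
RETAKE_THRESHOLD = 0.5   # artifact probability that triggers a retake request
LOW_CONFIDENCE = 0.6     # below this, a descriptor is reported as uncertain


@dataclass
class Finding:
    task: str          # "shape", "coating" or "color"
    descriptor: str    # e.g. "thin white coating"
    confidence: float  # model score between 0.0 and 1.0


@dataclass
class Report:
    retake: bool
    reasons: list[str] = field(default_factory=list)
    findings: list[Finding] = field(default_factory=list)
    uncertain: list[Finding] = field(default_factory=list)


def assemble_report(artifacts: dict[str, float], findings: list[Finding]) -> Report:
    # Step 1: screen the capture; if any artifact is likely, ask for a retake
    # instead of reporting reads from a poor photo.
    reasons = sorted(tag for tag, p in artifacts.items() if p >= RETAKE_THRESHOLD)
    if reasons:
        return Report(retake=True, reasons=reasons)

    # Step 2: split descriptors into confident and uncertain groups.
    confident = [f for f in findings if f.confidence >= LOW_CONFIDENCE]
    uncertain = [f for f in findings if f.confidence < LOW_CONFIDENCE]
    return Report(retake=False, findings=confident, uncertain=uncertain)


reads = [
    Finding("coating", "thin white coating", 0.82),
    Finding("color", "slightly red body", 0.55),
]
ok = assemble_report({"blur": 0.1, "glare": 0.2, "framing": 0.05}, reads)
print(ok.retake, [f.descriptor for f in ok.findings], [f.descriptor for f in ok.uncertain])
# False ['thin white coating'] ['slightly red body']

bad = assemble_report({"blur": 0.1, "glare": 0.7}, reads)
print(bad.retake, bad.reasons)
# True ['glare']
```

Screening first matches the "fewer retakes" goal: a blurry or glare-heavy photo is caught before any coating or color read is trusted.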

New data sources from global users (Aug 1–12, 2025)

Early‑August adoption helps us tune models for a wider range of lighting, camera types, and tongue presentations.

| Country | New images |
|---|---|
| India | 316 |
| Pakistan | 276 |
| Bangladesh | 48 |
| United States | 43 |
| Kenya | 20 |
| Indonesia | 18 |
| Nigeria | 15 |

Window: August 1–12, 2025. Counts reflect successfully analyzed, opted‑in images for model tuning.
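The table adds up to 736 new images, with India and Pakistan together contributing 592 (about 80%). The short script below reproduces those totals and per-country shares from the published counts; it only restates the table and adds no new data.

```python
# Opted-in images analyzed for model tuning, August 1-12, 2025.
NEW_IMAGES = {
    "India": 316,
    "Pakistan": 276,
    "Bangladesh": 48,
    "United States": 43,
    "Kenya": 20,
    "Indonesia": 18,
    "Nigeria": 15,
}


def share_by_country(counts: dict[str, int]) -> dict[str, float]:
    """Return each country's fraction of the total image count."""
    total = sum(counts.values())
    return {country: n / total for country, n in counts.items()}


total = sum(NEW_IMAGES.values())
shares = share_by_country(NEW_IMAGES)
for country, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{country:<14}{NEW_IMAGES[country]:>5}{share:>8.1%}")
print(f"{'Total':<14}{total:>5}")
```

The same arithmetic puts the roadmap's focus on Africa in context: Kenya and Nigeria together contribute 35 images, under 5% of this window.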

Integration with multi‑language support

We’re actively testing Indonesian in the assistant and reports. Early users rated the experience 5/5 stars. Next up: extending translation coverage to capture guidance, instructions, and lifestyle tips.

In progress: on‑device language detection in the camera so the capture UI auto‑adapts to your preferred language before you take a photo. This should reduce glare, blur, and framing errors by giving the right instructions at the right moment.
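A minimal sketch of the fallback logic such a feature might use, assuming "language detection" means reading the device's ordered language preferences (BCP 47-style tags such as `id-ID`) and that capture instructions exist for English and Indonesian. `resolve_capture_language`, `SUPPORTED`, and `ALIASES` are hypothetical names, not part of the MyZenCheck app.

```python
# Languages with capture instructions available (Indonesian is in testing).
SUPPORTED = ("en", "id")
DEFAULT = "en"
# Some older Java/Android runtimes report Indonesian with the legacy code "in".
ALIASES = {"in": "id"}


def resolve_capture_language(preferred: list[str],
                             supported: tuple[str, ...] = SUPPORTED,
                             default: str = DEFAULT) -> str:
    """Pick the first supported language from the user's ordered preferences.

    Tags like "id-ID" or "en_US" are reduced to their primary subtag.
    """
    for tag in preferred:
        primary = tag.replace("_", "-").split("-")[0].lower()
        primary = ALIASES.get(primary, primary)
        if primary in supported:
            return primary
    return default


print(resolve_capture_language(["id-ID", "en-US"]))  # id
print(resolve_capture_language(["in_ID"]))           # id
print(resolve_capture_language(["fr-FR", "en-GB"]))  # en
print(resolve_capture_language(["de-DE"]))           # en (fallback)
```

Falling back to English rather than failing keeps capture guidance visible even when a user's language is not yet translated.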

How this benefits your health checks

- Cleaner captures → clearer insights. Better photos mean more reliable shape, coating, and color reads.

- Local, practical guidance. Recommendations are tuned to where you live, so suggestions (like hydration habits or commonly available herbs) are realistic, affordable, and safe to try.

- Gentle, step‑by‑step support. Your result explains what we saw, what it could indicate in wellness terms, and how to follow up if something feels off.

MyZenCheck is educational and complementary. If you have symptoms or concerns, please consult a qualified healthcare professional.

What’s next (roadmap)

- On‑device language detection in the camera.

- Expanded datasets from Africa and Southeast Asia to reduce bias and improve generalization.

- Explainability UI with clearer confidence bands and capture‑quality tips.

Try the AI Tongue Analysis Scanner

Scan your tongue, get gentle insights, and learn simple next steps, free of charge.

Android: AI tongue diagnostics scan app


What’s improved in August 2025?

Four coordinated models now handle shape, coating, color, and anomaly checks, reaching 75% aggregate accuracy in internal validation, and the app gives better guidance for taking clear photos.

How accurate is the tongue analysis now?

Our current aggregate accuracy across shape, coating, and color tasks is 75% (internal validation), powered by four specialized models working together.

Where can I learn more about TCM principles behind the patterns?

See our tongue-signs explainer (e.g., pale tongue ↔ Qi/Blood deficiency; yellow coating ↔ damp-heat) for a user-friendly overview.

How should I take the best tongue photo?

Use natural light, avoid staining foods and drinks (coffee, tea, wine) for 24 hours beforehand, and keep your tongue relaxed. The app guides angle and distance and will soon switch its instructions to your language automatically.